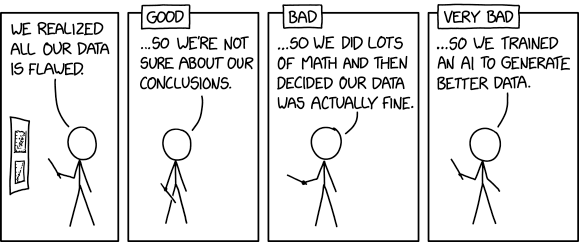

Flawed Data

We trained it to produce data that looked convincing, and we have to admit the results look convincing!

This is another comic about the right and wrong ways to conduct research when the data are inadequate.

In the first frame, Cueball presents a report on a poster (two graphs with data points and possible fitted curves), admitting that all of the data are actually flawed. He doesn't explain how they are flawed: whether they contradict some expected outcome or revelation, or whether there was a systematic error in the data-gathering process.

From there, three different reactions to this realization are displayed, ordered from the best decision to the worst.

- Good

In the first scenario Cueball states they are no longer sure about the conclusions they had drawn from the flawed data. This is, of course, the scientifically appropriate decision. The less reliable data are, the less reliable the conclusions that can be drawn. Ideally, flawed data would be discarded altogether, but there are situations in which better data are not available, so a compromise may be to draw tentative conclusions, but make clear that those are uncertain, due to issues with the data.

- Bad

In the second scenario Cueball explains that after heavy manipulation ("doing a lot of math") of their flawed data, they decided the data were actually fine. There are a number of methods that can be used to manipulate or "clean" data, with varying levels of complexity and reliability. Some of these methods may be valid in certain situations, but applying them only after the initial analysis has failed is highly suspect. The likelihood, in such a case, is that the researchers tried different methods of data manipulation, one after another, until they found one that gave the results they wanted. This is clearly highly subject to the biases of the researchers (both conscious and unconscious) and is much less likely to result in accurate conclusions. Unfortunately, this approach occurs in research more often than it should, and Randall is making clear that it's "bad".
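Why retrying manipulations until something works is so unreliable can be shown with a small simulation. The following is only a sketch, not anything taken from the comic: the data are pure random noise, each "cleaning" is a hypothetical stand-in that arbitrarily drops 30% of the points, and the function names are invented for illustration. The strongest correlation found across many attempts can never be weaker than the honest one, even though no real relationship exists.

```python
import math
import random


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def strongest_after_retries(xs, ys, attempts, keep_fraction, rng):
    """Try many arbitrary 'cleanings' and report the largest |r| seen.

    Each attempt keeps a random subset of the points, which is an
    illustrative stand-in for an unjustified outlier-removal step.
    """
    best = abs(pearson(xs, ys))  # attempt 0: the untouched data
    n_keep = max(3, int(len(xs) * keep_fraction))
    for _ in range(attempts):
        idx = rng.sample(range(len(xs)), n_keep)
        r = abs(pearson([xs[i] for i in idx], [ys[i] for i in idx]))
        best = max(best, r)
    return best


rng = random.Random(0)  # fixed seed so the run is repeatable
xs = [rng.gauss(0, 1) for _ in range(30)]  # noise: x and y are unrelated
ys = [rng.gauss(0, 1) for _ in range(30)]

honest = abs(pearson(xs, ys))
hacked = strongest_after_retries(xs, ys, attempts=500, keep_fraction=0.7, rng=rng)
print(f"|r| on all data:         {honest:.3f}")
print(f"best |r| after retrying: {hacked:.3f}")
```

Reporting only the best attempt hides the hundreds of attempts that failed, which is what makes the final number misleading.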

- Very bad

In the third and final scenario, Cueball explains that they scrapped all the flawed data. However, rather than gathering new data by correctly redoing the research, measurements, or tests, they trained an artificial intelligence (AI) to generate "better" data from nothing but a desire to match a target outcome. These are of course not real data,[citation needed] but just a simulation of data, selectively sieving statistical noise for desirable qualities. And since the researchers are probably looking for a specific result, they are training the AI to generate data that support it. This approach is "very bad": it not only produces no useful science, but also means that future researchers will be working from entirely artificial data. Doing so would be destructive to science and would be considered deeply unethical by any research body or association. The only purpose of such a method would be to convince others that you had proven something interesting, rather than to determine what is true (and possibly to gain some experience in AI programming). AI is a recurring theme on xkcd.
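The idea of sieving noise for desirable qualities can be illustrated with a toy example. This is a minimal sketch under loud assumptions: a simple hill-climbing loop stands in for the AI, the "target outcome" is a hypothetical line y = 2x + 1, and all names are invented here. The generator starts from random points and keeps only the random changes that make the points look more like the desired result.

```python
import random


def convincingness(points, slope, intercept):
    """Score a dataset by closeness to the desired line (higher is 'better').

    This is the negative mean squared distance from the target line;
    it measures agreement with the wish, not with reality.
    """
    return -sum((y - (slope * x + intercept)) ** 2 for x, y in points) / len(points)


def fabricate(n_points, slope, intercept, steps, rng):
    """Start from pure noise and keep only changes that raise the score."""
    points = [(rng.uniform(0, 10), rng.uniform(0, 30)) for _ in range(n_points)]
    start_score = convincingness(points, slope, intercept)
    for _ in range(steps):
        i = rng.randrange(n_points)
        x, y = points[i]
        # Propose a random nudge to one point; no measurement is involved.
        candidate = points[:i] + [(x, y + rng.gauss(0, 1))] + points[i + 1:]
        if convincingness(candidate, slope, intercept) > convincingness(points, slope, intercept):
            points = candidate
    return points, start_score, convincingness(points, slope, intercept)


rng = random.Random(1)  # fixed seed so the run is repeatable
fake_data, before, after = fabricate(20, 2.0, 1.0, 2000, rng)
print(f"score of the initial noise:  {before:.2f}")
print(f"score of the 'better' data:  {after:.2f}")
```

The score can only go up, because improvement is the sole acceptance rule. A high final score therefore shows that the optimization worked, not that the line describes anything real.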

In the title text, the results of the very bad approach are described. That the researchers got the data they were looking for is made clear when they state: "We trained it to produce data that looked convincing, and we have to admit, the results look convincing!" The AI was of course trained to provide data that look convincing, which is exactly why the researchers find the results so convincing.