Confounding Variables

You can find a perfect correlation if you just control for the residual.

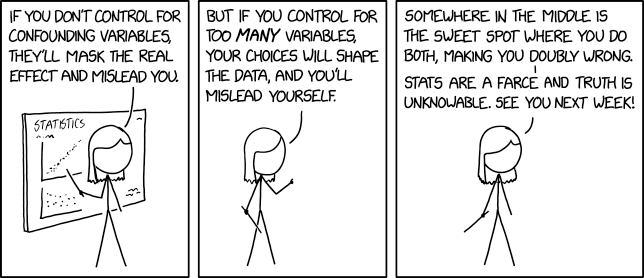

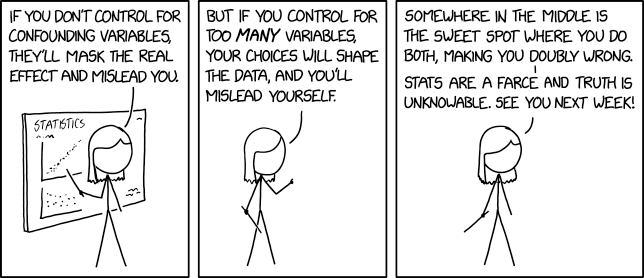

Miss Lenhart is teaching a course which apparently covers at least an overview of statistics.

In statistics, a confounding variable is a third variable that is related to the independent variable and also causally influences the dependent variable, so it can produce an apparent association between the two. An example is a correlation between sunburn rates and ice cream consumption, where the confounding variable is temperature: high temperatures cause people to go out in the sun and get burned more, and also to eat more ice cream.
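The sunburn/ice-cream example can be simulated. The sketch below is a minimal illustration under made-up assumptions: temperature is drawn uniformly between 10 and 35 degrees, and sunburn and ice cream consumption are hypothetical linear functions of temperature plus independent noise, with no direct link between them. The raw correlation comes out strong, but after "controlling for" temperature (correlating what is left of each variable once temperature's linear effect is removed, i.e. a partial correlation) it falls to roughly zero.

```python
import random

def mean(xs):
    return sum(xs) / len(xs)

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length lists."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / (vx * vy) ** 0.5

def residuals(ys, xs):
    """What is left of ys after removing a least-squares line in xs."""
    mx, my = mean(xs), mean(ys)
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    intercept = my - slope * mx
    return [y - (intercept + slope * x) for x, y in zip(xs, ys)]

rng = random.Random(1)  # fixed seed so the run is reproducible
n = 5000
temperature = [rng.uniform(10, 35) for _ in range(n)]
# Hypothetical model: temperature drives both outcomes; they never affect each other.
sunburn = [2 * t + rng.gauss(0, 5) for t in temperature]
ice_cream = [3 * t + rng.gauss(0, 5) for t in temperature]

raw_r = pearson(sunburn, ice_cream)
partial_r = pearson(residuals(sunburn, temperature), residuals(ice_cream, temperature))
print(f"raw r = {raw_r:.2f}; controlling for temperature, r = {partial_r:.2f}")
```

With these made-up numbers the raw correlation is around 0.9, while the partial correlation is close to zero, because temperature was the only thing linking the two.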

One way to control for a confounding variable is to restrict your data set to samples that share the same value of the confounding variable. But if you do this too much, your choice of that "same value" can produce results that don't generalize. Common examples in medical testing are studies whose subjects all share the same sex or race: the results may only be valid for that sex or race, not for all patients.
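Restriction can be sketched with the same kind of hypothetical data (all numbers are made up for illustration): keep only the days whose temperature falls in a narrow band, and the sunburn/ice-cream correlation mostly disappears. The sketch also shows the cost: the restricted analysis uses only a small slice of the data, and it only describes days in that temperature band.

```python
import random

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length lists."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / (vx * vy) ** 0.5

rng = random.Random(2)  # fixed seed so the run is reproducible
n = 20000
days = []  # each entry: (temperature, sunburn, ice_cream), all hypothetical
for _ in range(n):
    t = rng.uniform(10, 35)
    days.append((t, 2 * t + rng.gauss(0, 5), 3 * t + rng.gauss(0, 5)))

# Unrestricted: temperature is free to vary, so it confounds the comparison.
all_r = pearson([d[1] for d in days], [d[2] for d in days])

# Restricted: only days between 24.5 and 25.5 degrees, so temperature is (almost) fixed.
band = [d for d in days if 24.5 <= d[0] < 25.5]
band_r = pearson([d[1] for d in band], [d[2] for d in band])

print(f"all {len(days)} days: r = {all_r:.2f}")
print(f"{len(band)} days at 24.5-25.5 degrees: r = {band_r:.2f}")
```

The restricted result says nothing about cold or very hot days; that is the generalization problem in miniature.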

There are often multiple confounding variables, too. It may be difficult to control for all of them without narrowing down your data set so much that it's no longer useful. So you have to choose which variables to control for, and this choice can bias your results.
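A rough sketch of how quickly restriction shrinks a data set when several confounders are fixed at once. It assumes, purely for illustration, 10,000 records with four hypothetical confounders (the names below are invented), each taking one of four equally likely, independent values. Fixing k of them keeps about 10,000 × (1/4)^k records: roughly 2,500, 625, 156 and 39.

```python
import random

rng = random.Random(0)  # fixed seed so the run is reproducible
# Hypothetical confounders, each with 4 equally likely levels (0-3).
confounders = ["age_band", "region", "income_band", "diet"]
records = [{c: rng.randrange(4) for c in confounders} for _ in range(10_000)]

def restrict(rows, fixed):
    """Keep only rows that match every (confounder, value) pair in `fixed`."""
    return [r for r in rows if all(r[c] == v for c, v in fixed.items())]

counts = []
for k in range(len(confounders) + 1):
    fixed = {c: 0 for c in confounders[:k]}  # control for the first k confounders
    counts.append(len(restrict(records, fixed)))
    print(f"controlling for {k} variable(s): {counts[-1]} records left "
          f"(expected about {10_000 / 4 ** k:.0f})")
```

Each added control shrinks the sample by another factor of four under these assumptions, which is why controlling for everything quickly leaves too little data to say anything.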

In the final panel, Miss Lenhart suggests that there is a sweet spot in the middle where both the confounding variables and your controls distort the end result, making you "doubly wrong". A "doubly wrong" result would simultaneously show spurious correlations (from controlling for too few variables) and be too narrow to be useful (from controlling for too many), the 'worst of both worlds'.

Finally, she admits that no matter what you do, the results will be misleading, so statistics are useless. This would seem to be an unexpected declaration from someone supposedly trying to teach statistics[citation needed] and expecting her students to continue the course. It is possible, though, that she is not there purely to teach this subject, but is instead running a course with a different purpose, and this week just happened to end with this particular targeted critique.

In the title text, the residual is the difference between a particular data point and the value predicted by the line (or curve) that is supposed to describe the overall relationship. The collection of all residuals is used to judge how well the line fits the data. If you "control" for the residual by including a variable that exactly matches the discrepancies between the predicted and actual outcomes, you trivially get a perfectly fitting model, since that variable was computed from the outcome itself. However, it is nigh impossible (especially in the social and behavioral sciences) to find a genuine "final variable" that supplies all the "missing pieces" of the prediction model.
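The title text's trick can be checked numerically. The sketch below uses made-up data (y depends linearly on x plus noise), fits an ordinary least-squares line, then "controls for" the residual by adding it as a second predictor. Because y equals the fitted line plus the residual exactly, least squares on (1, x, residual) would recover the original intercept and slope and give the residual a coefficient of exactly 1, so the code simply adds it back rather than refitting. The fit becomes perfect (R² = 1), but only because the "new variable" is the outcome in disguise.

```python
import random

def fit_line(xs, ys):
    """Ordinary least-squares intercept and slope for y = a + b*x."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    b = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    return my - b * mx, b

def r_squared(ys, preds):
    """Coefficient of determination: 1 - SS_residual / SS_total."""
    my = sum(ys) / len(ys)
    ss_res = sum((y - p) ** 2 for y, p in zip(ys, preds))
    ss_tot = sum((y - my) ** 2 for y in ys)
    return 1 - ss_res / ss_tot

rng = random.Random(42)  # fixed seed so the run is reproducible
x = [rng.uniform(0, 10) for _ in range(200)]
y = [1.5 * xi + rng.gauss(0, 3) for xi in x]  # hypothetical noisy linear relationship

a, b = fit_line(x, y)
line_pred = [a + b * xi for xi in x]
residual = [yi - p for yi, p in zip(y, line_pred)]

# "Control for the residual": least squares on (1, x, residual) keeps a and b
# and gives the residual a coefficient of 1, so the prediction is line + residual.
cheat_pred = [p + 1.0 * e for p, e in zip(line_pred, residual)]

r2_line = r_squared(y, line_pred)
r2_cheat = r_squared(y, cheat_pred)
print(f"R^2 of the line: {r2_line:.2f}; "
      f"R^2 after 'controlling for the residual': {r2_cheat:.2f}")
```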